When leveraging Microsoft Identity Manager (MIM) and the SharePoint Connector for User Profile Synchronization, some customers have a requirement to import profile pictures from the thumbnailPhoto attribute in Active Directory.

This post details the correct way of dealing with this scenario whilst retaining the principle of least privilege. The configuration described here is appropriate for all of the following deployments:

- SharePoint 2016, MIM 2016, and the MIM 2016 SharePoint Connector

- SharePoint 2013, MIM 2016, and the MIM 2016 SharePoint Connector

- SharePoint 2013, FIM 2010 R2 SP1, and the FIM 2010 R2 SharePoint Connector

* Note: you can also use MIM or FIM 2010 R2 SP1 with SharePoint 2010, although this is not officially supported by the vendor.

Before we get started, it is important to understand that if the customer requirement is to import basic profile properties from Active Directory plus profile photos, then MIM/FIM is almost certainly the wrong choice. SharePoint’s Active Directory Import capability, alongside some simple PowerShell or a console application, will deliver this functionality at significantly lower capital and operational cost.

However, many customers are dealing with more complicated identity synchronization requirements and thumbnailPhoto is merely one of the elements required. Due to some bizarre behaviour of SharePoint’s ProfileImportExportService web service, previous vendor guidance on this capability has been inaccurate, and indeed yours truly has provided dubious advice on this topic in the past.

Most enterprise identity synchronization deployments have stringent requirements regarding the access levels granted to the various accounts used. This is just as it should be; no credible identity subsystem allows more privilege than is necessary to get the job done. Naturally, a system which provides a “hub” of identity data should be as secure as possible. Because of this security posture, many customers have complained about the level of access “required” by the account used within the SharePoint Connector (Management Agent). In some cases, customers have refused to deploy, or have used alternative means to deal with thumbnailPhoto. For those customers, this is no little deal at all.

What is the issue?

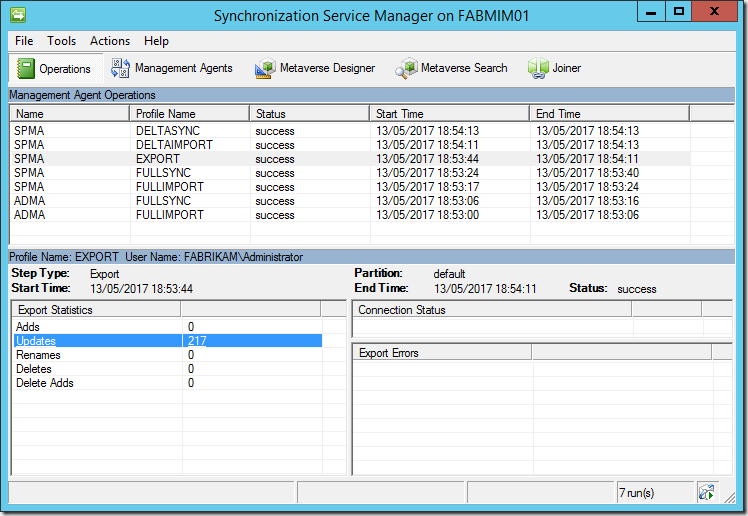

Assume that MIM Synchronization is configured using an Active Directory MA, and a SharePoint MA with the Export option for Picture Flow Direction*. The account used by the SharePoint MA is added to the SharePoint Farm Administrators group as required. We then perform an initial Full Synchronization. MIM Synchronization successfully exports 217 profiles to the UPA, which now shows 218 profiles: the Farm Administrator plus the 217 new profiles.

*The Import option for Picture Flow Direction, whilst available in the UI and PowerShell, is not implemented and therefore won’t do anything.

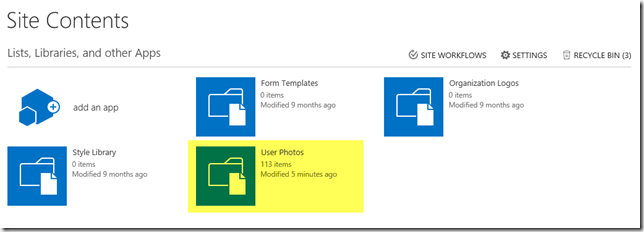

We will, however, notice some rather puzzling results for the profile pictures. The Profile Pictures folder is correctly created within the My Site Host Site Collection’s User Photos Library. However, only some of the profile pictures are created, in this example 112 of them. What happened to the other 105?

The numbers will actually vary: I can run this test scenario hundreds of times (and believe me, I have!) and get different numbers each time. However, roughly half the pictures are created each time.

This is the problem which has led to incorrect guidance. It really is quite a puzzler. Obviously, some files are created, and thus logic suggests that the account calling the web service has the appropriate permissions. If the permissions were wrong, surely no files would be created. Alas, this is SharePoint after all, and sometimes it really isn’t worth the cycles! The bottom line is that there is an issue with the web service, and that’s not something which can easily be resolved.
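If you want to measure the shortfall in your own farm, a quick count of the User Photos library does the job. This is a minimal sketch, assuming the library has its default title (User Photos), the uploaded photos sit in the Profile Pictures folder, Update-SPProfilePhotoStore has not yet been run (it adds three thumbnails per profile), and the My Site host URL is a hypothetical placeholder. $ExpectedPhotos is simply the number of profiles the SharePoint MA exported.

```powershell
Add-PSSnapin -Name "Microsoft.SharePoint.PowerShell" -ErrorAction SilentlyContinue

# Assumption: hypothetical My Site host URL; replace with your own
$MySiteHostUrl = "https://mysite.fabrikam.com"
# Number of profiles exported by the SharePoint MA (217 in this example)
$ExpectedPhotos = 217

$web = Get-SPWeb -Identity $MySiteHostUrl
try {
    # Default title of the photo library on the My Site host
    $list = $web.Lists.TryGetList("User Photos")
    if ($null -eq $list) { throw "User Photos library not found at $MySiteHostUrl" }

    # ItemCount includes folders, so the Profile Pictures folder counts as one item
    Write-Host "User Photos items (including folders): $($list.ItemCount)"

    # The exported photos (GUID file names) live in the Profile Pictures folder
    $folder = $list.RootFolder.SubFolders["Profile Pictures"]
    $photoCount = $folder.Files.Count
    Write-Host "Files in Profile Pictures: $photoCount"
    Write-Host "Missing pictures: $($ExpectedPhotos - $photoCount)"
}
finally {
    # Release the SPWeb obtained from Get-SPWeb
    $web.Dispose()
}
```

The same count is a handy sanity check after any change to the configuration, as long as it runs before the thumbnails are generated.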

The documentation for the FIM 2010 R2 SP1 SharePoint Connector, the predecessor of the currently shipping release, remains the best documentation available. It notes:

> When you configure the management agent for SharePoint 2013, you need to specify an account that is used by the management agent to connect to the SharePoint 2013 central administration web site. The account must have administrative rights on SharePoint 2013 and on the computer where SharePoint 2013 is installed.
>
> If the account doesn’t have full access to SharePoint 2013 and the local folders on the SharePoint computer, you might run into issues during, for example, an attempt to export the picture attribute.
>
> If possible, you should use the account that was used to install SharePoint 2013.

This, to a SharePoint practitioner, is clearly poor guidance. Whilst it’s true that the MA account must connect to Central Administration, that only means it must be a Farm Administrator. There is no requirement for the account to have other administrative rights on the SharePoint Farm, and no requirement for machine rights on any server in the SharePoint Farm. Certainly no access to the local file system of a SharePoint server is needed. Furthermore, there is no scenario in which the SharePoint Install account should ever be used for runtime operations of any component, anywhere, in any farm! Of course, this material was authored by the FIM folks, and there is no reason to expect them to be entirely familiar with the identity configuration of SharePoint, especially given that the topic confuses most SharePoint folks as well!

When I delivered the “announce” of the MIM MA at Microsoft Ignite last fall, I made a point of this issue by stating that the Farm Account should be used by the SharePoint MA if importing from thumbnailPhoto. This is also incorrect guidance. In my defence, at the time we had worked for a couple of weeks to try and get to the bottom of the issue, and ran out of time before the session. Thus, to show it all working, there was little choice. It’s pretty silly to do a reveal of something if the demo doesn’t work.

Using the Farm Account for anything other than the Farm is a bad idea. In this case it’s extremely dubious, because in a real-world deployment the account’s password would need to be known by the MIM administrator. The internal security compliance team of any large corporation is simply not going to accept that.

Others have suggested that the SP MA account be added to a Full Control User Policy on the My Site Host web application, or rather, that the web application’s GrantAccessToProcessIdentity() method be used, which results in the same policy. That guidance is also inherently very bad. A large number of deployments now use a single Web Application, and granting the MA account Full Control over it is patently a bad idea. Furthermore, such a configuration allows unfettered access to the underlying content databases (which store the users’ My Sites, remember!) and provides Site Collection Administrator and Site Collection Auditor rights on the My Site host.

The Workaround

So, we don’t want to use the Install Account, we don’t want to use the Farm Account, and we don’t wish to configure an unrestricted policy.

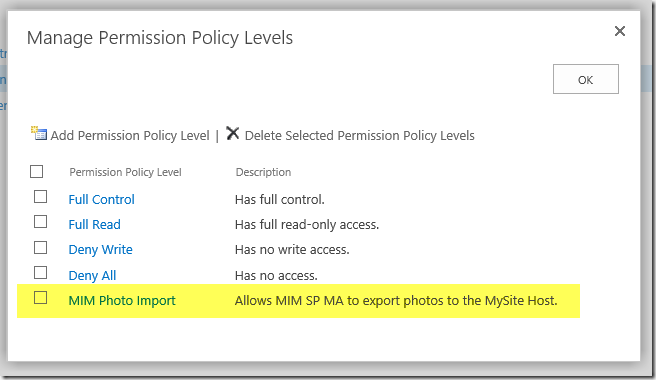

The answer to this conundrum is to configure a brand-new Permission Policy to which we will add a User Policy for the SharePoint MA account. This enables all the pictures to be created, without granting any more permissions than necessary.

The Grant Permissions for this policy are: Add Items, Edit Items, Delete Items, View Items and Open Site. No more, no less.
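In the object model, these permission names correspond to members of the SPBasePermissions flags enumeration, which is what a script has to supply. The sketch below simply shows that mapping and the combined mask it produces; it assumes the SharePoint snap-in is available, and the $rightsMap table is an illustrative helper, not a SharePoint API.

```powershell
Add-PSSnapin -Name "Microsoft.SharePoint.PowerShell" -ErrorAction SilentlyContinue

# Illustrative mapping: permission name as listed above -> SPBasePermissions member
$rightsMap = [ordered]@{
    "View Items"   = "ViewListItems"
    "Add Items"    = "AddListItems"
    "Edit Items"   = "EditListItems"
    "Delete Items" = "DeleteListItems"
    "Open Site"    = "Open"
}

# SPBasePermissions is a flags enum, so a comma-separated list of member
# names converts to a single combined value
$mask = [Microsoft.SharePoint.SPBasePermissions]($rightsMap.Values -join ", ")

$rightsMap.GetEnumerator() | ForEach-Object { '{0,-14} -> {1}' -f $_.Key, $_.Value }
'Combined mask: {0} (0x{1:X})' -f $mask, [uint64]$mask
```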

Then we add a new User Policy, for the SharePoint MA account, to the Web Application hosting the My Site host Site Collection, using the new Permission Policy Level.

Now, at this point, if we perform another Full Synchronization, we have a problem. As far as MIM Synchronization is concerned, the previous export worked flawlessly, and it thinks all the pictures are present. This is because the ProfileImportExportService didn’t report any exceptions. The failures have been lost to the great correlation ID database in the sky. Gone forever. If we search the SharePoint MA’s Connector Space within MIM, we will see the photo data present and correct. There are zero updates to make.

Of course, the idea is to configure all of this correctly before we perform the initial Full Synchronization. However, if you are following along, we can “fix” this by deleting the SharePoint MA’s Connector Space and then performing a Full Synchronization. This will force a fresh Export to SharePoint (there is no need to delete the AD Connector Space).

Once the Full Synchronization has completed, we will see the correct number of items within the User Photos Library (one item is the Profile Pictures folder, the other 217 are images).

Of course, we would also need to run Update-SPProfilePhotoStore at this point to generate the three images for each profile, and delete the initial data (the files with GUIDs for filenames).
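As an example, the call might look like the sketch below. The My Site host URL is a hypothetical placeholder; -CreateThumbnailsForImportedPhotos generates the thumbnails from the imported photos, and -NoDelete $false allows the original GUID-named files to be removed afterwards.

```powershell
Add-PSSnapin -Name "Microsoft.SharePoint.PowerShell" -ErrorAction SilentlyContinue

# Assumption: hypothetical My Site host URL; replace with your own
$MySiteHostUrl = "https://mysite.fabrikam.com"

# Create the small, medium and large thumbnails for each imported photo,
# then delete the original GUID-named files (-NoDelete $false)
Update-SPProfilePhotoStore -MySiteHostLocation $MySiteHostUrl `
    -CreateThumbnailsForImportedPhotos $true `
    -NoDelete $false
```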

But wait, there is more!

As you may be aware, the UPA does not fully understand Claims identifiers for internal access control. This is why we must enter Windows Classic style identifiers for UPA permissions and administrators.

Whilst we can create the new Permission Policy in Central Administration, we cannot create a new User Policy there using a Windows Classic identifier: whatever we enter in the UI will be transformed into a claims identifier. For this to work, the policy must be as shown in the screenshot above (FABRIKAM\spma) – using a Classic identifier. And yes, I do feel stupid calling a NetBIOS username “classic”, but I am not in charge of naming anything :)
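To see the difference, the sketch below prints the example account in its Classic form and then encodes it as a Windows claims identifier using New-SPClaimsPrincipal. The encoded form begins with a prefix such as i:0#.w|, which is exactly what we must avoid in this User Policy. It assumes FABRIKAM\spma exists in your domain.

```powershell
Add-PSSnapin -Name "Microsoft.SharePoint.PowerShell" -ErrorAction SilentlyContinue

$SpMaAccount = "FABRIKAM\spma"

# Windows Classic identifier: what the User Policy must contain for the UPA
Write-Host "Classic identifier: $SpMaAccount"

# Windows claims identifier: roughly what the Central Administration UI
# stores when an account is entered there instead
$claim = New-SPClaimsPrincipal -Identity $SpMaAccount -IdentityType WindowsSamAccountName
Write-Host "Claims identifier:  $($claim.ToEncodedString())"
```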

In order to configure the policy correctly for this use case, we must use PowerShell, which is actually just fine, because we don’t really want to be using the UI anyway. We can also combine all this work into a simple little script that creates both the Permission Policy and the User Policy, as shown below.

```powershell
# Load the SharePoint cmdlets (no error if the snap-in is already loaded)
Add-PSSnapin -Name "Microsoft.SharePoint.PowerShell" -ErrorAction SilentlyContinue

# update these vars to suit your environment
$WebAppUrl = "https://mysite.fabrikam.com"   # placeholder: web application hosting the My Site host
$PolicyRoleName = "MIM Photo Import"
$PolicyRoleDescription = "Allows MIM SP MA to export photos to the MySite Host."
$GrantRightsMask = "ViewListItems, AddListItems, EditListItems, DeleteListItems, Open"
$SpMaAccount = "FABRIKAM\spma"   # Windows Classic identifier, not a claims identifier
$SpMaAccountDescription = "MIM SP MA Account"

# do the work
$WebApp = Get-SPWebApplication -Identity $WebAppUrl

# Create new Permission Policy Level
$policyRole = $WebApp.PolicyRoles.Add($PolicyRoleName, $PolicyRoleDescription)
# PowerShell converts the comma-separated names to SPBasePermissions flags
$policyRole.GrantRightsMask = $GrantRightsMask

# Create new User Policy with the specified account
$policy = $WebApp.Policies.Add($SpMaAccount, $SpMaAccountDescription)

# Bind the Permission Policy Level to the User Policy
$policy.PolicyRoleBindings.Add($policyRole)

# Commit
$WebApp.Update()
```
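Once the policy script has run, it is worth confirming what was actually stored. This read-only sketch lists the new Permission Policy Level and the User Policy for the MA account; the URL is a hypothetical placeholder, and the other values match those used in the policy script.

```powershell
Add-PSSnapin -Name "Microsoft.SharePoint.PowerShell" -ErrorAction SilentlyContinue

$WebAppUrl = "https://mysite.fabrikam.com"   # hypothetical placeholder
$PolicyRoleName = "MIM Photo Import"
$SpMaAccount = "FABRIKAM\spma"

$WebApp = Get-SPWebApplication -Identity $WebAppUrl

# The Permission Policy Level and the rights it grants or denies
$WebApp.PolicyRoles |
    Where-Object { $_.Name -eq $PolicyRoleName } |
    Select-Object Name, GrantRightsMask, DenyRightsMask

# The User Policy: UserName should be the Classic FABRIKAM\spma form,
# bound only to the new Permission Policy Level
$WebApp.Policies |
    Where-Object { $_.UserName -eq $SpMaAccount } |
    Select-Object UserName, DisplayName,
        @{ Name = "PolicyLevels"; Expression = { ($_.PolicyRoleBindings | ForEach-Object { $_.Name }) -join ", " } }
```

If the UserName comes back in an encoded claims form rather than FABRIKAM\spma, the policy was most likely created through the UI and should be recreated with PowerShell.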

Summary

There you have it. How to use a least privilege account for the SharePoint MA, and successfully import thumbnailPhoto from Active Directory. In summary, the required steps are:

- Create an account in Active Directory for use by the SharePoint MA (e.g. FABRIKAM\spma)

- Add the account as a SharePoint Farm Administrator using Central Administration or PowerShell

- Create the Permission Policy and User Policy for the account using the PowerShell above

- Configure the SharePoint MA with this account, and select Export as the Picture Flow Direction. If you are using the MIMSync toolkit, the thumbnailPhoto attribute flow is already taken care of. If you are not, you will need to configure the necessary attribute flow yourself.

- Perform Synchronization operations

- Execute Update-SPProfilePhotoStore once Synchronization is complete to create the thumbnail images used by SharePoint
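For the Farm Administrator step, if you prefer PowerShell to Central Administration, one common approach is to add the account to the Farm Administrators group on the Central Administration site. This is a sketch, assuming it runs on a farm server under an existing Farm Administrator account and that the group has its default (English) name.

```powershell
Add-PSSnapin -Name "Microsoft.SharePoint.PowerShell" -ErrorAction SilentlyContinue

$SpMaAccount = "FABRIKAM\spma"

# Locate the Central Administration web application and its root web
$caWebApp = Get-SPWebApplication -IncludeCentralAdministration |
    Where-Object { $_.IsAdministrationWebApplication }
$caWeb = Get-SPWeb -Identity $caWebApp.Url
try {
    # Farm Administrators is a site group on the Central Administration site
    $farmAdmins = $caWeb.SiteGroups["Farm Administrators"]
    $user = $caWeb.EnsureUser($SpMaAccount)
    $farmAdmins.AddUser($user)
    Write-Host "$SpMaAccount added to $($farmAdmins.Name)"
}
finally {
    # Release the SPWeb obtained from Get-SPWeb
    $caWeb.Dispose()
}
```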

Now of course, we have added the SP MA account as a Farm Administrator, so it could be used to do just about anything to the farm. Least privilege is always a compromise, and in this case Farm Administrator membership is a requirement of the ProfileImportExportService – a SharePoint product limitation. Therefore, this approach is the best compromise available, and one that has already been accepted by security compliance at three enterprise customers using MIM and the SharePoint Connector. The bottom line is that if you don’t trust your MIM administrators, or indeed your SharePoint ones, you’ve got bigger security problems than a couple of accounts!

Also, none of this explains why, without the policy configuration or overly aggressive permissions, the web service creates some pictures but not others. But life is just too short to go down that rabbit hole!

Finally, it is always good to remember the mantra of the SharePoint Advanced Certification programs, “just because you can, doesn’t mean you should”. This post is not intended to promote the use of MIM for just the profile photo. Furthermore, using thumbnailPhoto in AD for photos is just one approach of many. For some organisations, especially the larger ones, this would be a spectacularly stupid implementation choice, and of course in many others Active Directory is not the master source of identity anyway.

s.

by Spence via harbar.net